AI content generation tools track performance through analytics that measure engagement, conversion, distribution effectiveness, and business impact. These platforms typically use integrated dashboards to collect data across channels, apply machine learning to detect patterns, and surface insights for content optimization. Tracking usually combines real-time monitoring with scheduled analysis cycles, so that both immediate adjustments and longer-term strategic improvements are based on suitable data. The sections below cover metrics, analysis methods, monitoring cadence, attribution, and integrations.

What metrics do AI content generation systems measure?

AI content generation systems measure four primary metric categories: engagement metrics, conversion metrics, content distribution metrics, and business impact metrics. Engagement metrics include time on page, scroll depth, bounce rate, and interaction events. Conversion metrics track form completions, downloads, sign-ups, and purchases. Distribution metrics monitor reach, impressions, and channel performance. Business impact metrics connect content directly to revenue, customer acquisition costs, and lifetime value.

The most advanced AI content platforms go beyond basic analytics by measuring content quality indicators such as relevance scores, readability assessments, and sentiment analysis. These deeper metrics help you understand not only whether your content is being consumed, but also how well it meets audience needs.

For example, when tracking blog content, these systems might analyze which sections readers spend the most time on, which calls-to-action generate the most conversions, and how traffic sources affect engagement patterns. This multi-dimensional view helps you optimize both the creative elements and distribution strategies simultaneously.

Many platforms also incorporate competitive intelligence, allowing you to benchmark your content performance against industry standards or direct competitors. This comparative data provides context for your metrics and helps identify realistic improvement opportunities based on market conditions.

How do AI algorithms analyze content performance data?

AI algorithms analyze content performance data through pattern recognition, predictive modeling, natural language processing, and automated segmentation. These systems process vast amounts of structured and unstructured data to identify correlations between content elements and performance outcomes. Machine learning models continuously improve their accuracy by learning from new data inputs and feedback loops, adapting to changing audience behaviors and market conditions.

The analysis process typically begins with data normalization, where algorithms standardize metrics across different channels and formats to enable fair comparisons. For instance, raw engagement on a social post is not directly comparable to raw engagement on a long-form article, so each metric is rescaled relative to what is typical for its own channel before pieces are compared.
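One simple way to picture this normalization step is a per-channel z-score. The sketch below is illustrative only: the record fields (`channel`, `engagement`) and the choice of z-scores are assumptions, not a description of how any particular platform works.

```python
from statistics import mean, pstdev

def normalize_by_channel(records):
    """Attach a z-score to each record, computed within its own channel."""
    by_channel = {}
    for rec in records:
        by_channel.setdefault(rec["channel"], []).append(rec["engagement"])

    # Mean and population standard deviation per channel.
    stats = {ch: (mean(vals), pstdev(vals)) for ch, vals in by_channel.items()}

    normalized = []
    for rec in records:
        mu, sigma = stats[rec["channel"]]
        # A channel with no spread carries no ranking information.
        z = 0.0 if sigma == 0 else (rec["engagement"] - mu) / sigma
        normalized.append({**rec, "z_score": z})
    return normalized

# Hypothetical data: social engagement in interactions, blog in minutes read.
records = [
    {"id": "post-1", "channel": "social", "engagement": 100},
    {"id": "post-2", "channel": "social", "engagement": 300},
    {"id": "article-1", "channel": "blog", "engagement": 2.0},
    {"id": "article-2", "channel": "blog", "engagement": 6.0},
]
scored = normalize_by_channel(records)
```

After this step, "post-2" and "article-2" both sit one standard deviation above their channel average, so they can be compared even though their raw numbers use different units.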

Natural language processing (NLP) capabilities enable these systems to assess content context, sentiment, and topical relevance. This means the AI can indicate not just which content performs well, but also which characteristics are associated with that performance for specific audiences. The algorithms identify patterns in successful content (particular writing styles, image types, or structural elements) and generate recommendations for future content creation.

Advanced systems also employ A/B testing automation, where the AI can design, implement, and analyze content experiments without manual intervention. These self-optimizing systems continuously test variations to maximize performance against your defined objectives.
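The analysis stage of such an experiment often comes down to asking whether the difference between two variants is larger than chance would explain. Below is a minimal sketch using a standard two-proportion z-test. The function name and inputs are hypothetical, and real platforms may use different statistical methods (for example sequential or Bayesian tests).

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value

# Hypothetical experiment: variant B converts 150 of 1000 visitors, A 100 of 1000.
z, p_value = two_proportion_z_test(100, 1000, 150, 1000)
variant_b_wins = z > 0 and p_value < 0.05
```

An automated system would run a check like this on a schedule and promote the winning variant only once the sample size and significance threshold you defined have been reached.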

When should companies monitor AI-generated content results?

Companies should monitor AI-generated content results on a structured cadence that includes real-time tracking, weekly performance reviews, monthly trend analysis, and quarterly strategic assessments. Real-time monitoring catches immediate issues like technical problems or negative audience reactions. Weekly reviews identify short-term performance patterns and optimization opportunities. Monthly analysis reveals deeper trends and audience preference shifts. Quarterly assessments connect content performance to broader business goals.

The optimal monitoring schedule depends on your content volume and velocity. High-frequency content types like social media posts or news articles require more frequent monitoring, while long-form content like whitepapers might need longer measurement periods to accurately assess performance.

For campaign-based content, establish clear monitoring windows that account for the full conversion cycle. This might mean tracking initial engagement metrics immediately, but delaying conversion analysis until your typical sales cycle has had time to complete. This prevents premature optimization decisions based on incomplete data.
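As a rough illustration of such a monitoring window, the sketch below only includes conversion metrics in a review once a full sales cycle has passed since publication. The function names and the 45-day cycle are assumptions chosen for the example.

```python
from datetime import date, timedelta

def conversion_window_closed(published: date, today: date, sales_cycle_days: int) -> bool:
    """True once a full sales cycle has elapsed since publication."""
    return today - published >= timedelta(days=sales_cycle_days)

def metrics_to_review(published: date, today: date, sales_cycle_days: int) -> list:
    """Engagement is always reviewable; conversion only after the window closes."""
    metrics = ["engagement"]
    if conversion_window_closed(published, today, sales_cycle_days):
        metrics.append("conversion")
    return metrics

# Hypothetical campaign published on 1 January 2024 with a 45-day sales cycle.
early = metrics_to_review(date(2024, 1, 1), date(2024, 1, 15), 45)
later = metrics_to_review(date(2024, 1, 1), date(2024, 3, 1), 45)
```

Two weeks after launch only engagement is reviewed. By 1 March, 60 days in, conversion data is included as well.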

When implementing new AI content generation tools, it’s important to establish a baseline measurement period. This gives you comparative data to accurately assess whether the AI-generated content is outperforming previous content approaches. During this initial phase, monitor more frequently to catch any implementation issues or necessary adjustments to your AI parameters.

Regular monitoring should also include periodic reviews of your measurement framework itself. As business objectives shift or market conditions change, you may need to adjust which metrics matter most for your content strategy.

Why does attribution matter for AI content performance tracking?

Attribution matters for AI content performance tracking because it connects content investments to business outcomes, enables accurate ROI calculation, informs resource allocation, and provides feedback for AI model improvement. Without proper attribution, companies cannot determine which content elements drive value, leading to misguided optimization and wasted resources. Attribution models map the customer journey, giving appropriate credit to each content touchpoint that influences conversion.

The challenge with attribution is that modern customer journeys are rarely linear. A prospect might encounter your AI-generated content across multiple channels over weeks or months before converting. Simple attribution models like “last click” fail to capture this complexity, potentially undervaluing content that plays a critical role early in the decision process.

Advanced AI tracking systems address this through multi-touch attribution models that use machine learning to weight the importance of each interaction. These models can recognize patterns across thousands of customer journeys to determine the typical influence of different content types at various funnel stages.
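To make the idea of weighting touchpoints concrete, here is a minimal sketch of time-decay attribution, a simple rule-based multi-touch model. It is not the machine-learned weighting described above: the weights come from a fixed half-life rather than from data, and the journey structure and the 7-day half-life are assumptions for illustration.

```python
from collections import defaultdict

def time_decay_attribution(journeys, half_life_days=7.0):
    """Split each conversion's value across touches, favoring recent ones."""
    credit = defaultdict(float)
    for journey in journeys:
        # Each touch is (content_id, days_before_conversion).
        touches = journey["touches"]
        if not touches:
            continue
        # A touch loses half its weight for every half-life that has passed.
        weights = [0.5 ** (days / half_life_days) for _, days in touches]
        total = sum(weights)
        for (content_id, _), weight in zip(touches, weights):
            credit[content_id] += journey["value"] * weight / total
    return dict(credit)

# Hypothetical journey: a guide read 7 days before converting, an email on the day.
journeys = [{"value": 300.0, "touches": [("guide", 7), ("email", 0)]}]
credit = time_decay_attribution(journeys)
```

Under last-click attribution the email would receive all 300 of the conversion value. Here the guide keeps a third of the credit, which is the kind of early-stage contribution that single-touch models miss.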

Attribution becomes even more complex when tracking offline conversions that were influenced by online content. AI systems can help bridge this gap by identifying correlations between online engagement patterns and offline activities, creating probabilistic models that estimate content contribution to overall conversion rates.

For AI content generation specifically, attribution provides the feedback loop necessary for system improvement. By connecting content characteristics to performance outcomes, the AI can refine its understanding of what works for your specific audience and business goals.

What integrations enhance AI content performance monitoring?

Integrations that enhance AI content performance monitoring include CRM systems, marketing automation platforms, web analytics tools, social media insights, e-commerce platforms, and business intelligence solutions. These connections create a unified data ecosystem that provides comprehensive visibility into content performance across the entire customer journey. The most valuable integrations enable bidirectional data flow, allowing performance insights to automatically inform content generation parameters.

CRM integrations are particularly valuable as they connect content engagement to specific customer profiles, enabling personalization based on individual behavior patterns and preferences. When your AI content generation system can access this data, it can create targeted content variations that address specific customer needs at each journey stage.

Marketing automation platform integrations extend your tracking capabilities by capturing email engagement, nurture path progression, and lead scoring data. This mid-funnel performance information helps evaluate how effectively your content moves prospects toward conversion, not just how it attracts initial attention.

For e-commerce businesses, product database and inventory management system integrations allow content performance to be tracked in relation to specific product performance. This enables sophisticated analyses like identifying which content approaches work best for different product categories or price points.

The most advanced setups incorporate data warehouse solutions that centralize information from all connected systems. This creates a single source of truth for content performance and enables complex cross-system analyses that would be impossible with siloed data.

API-based integrations with emerging channels and platforms ensure your performance monitoring remains comprehensive as new content distribution opportunities arise. This adaptability is essential for maintaining accurate performance assessment in the rapidly evolving digital landscape.

By combining these integrations, you create an ecosystem where content performance data flows seamlessly between systems, enabling truly data-driven content strategy.

Conclusion

Effective tracking of AI-generated content requires a sophisticated approach that combines the right metrics, analysis methods, monitoring cadences, attribution models, and system integrations. By implementing comprehensive performance tracking, you gain the insights needed to continuously improve content quality and business impact.

The most successful organizations treat content performance tracking as an ongoing process rather than a one-time implementation. They regularly review and refine their tracking frameworks to align with evolving business goals and market conditions. This adaptive approach ensures that AI content generation tools deliver maximum value.

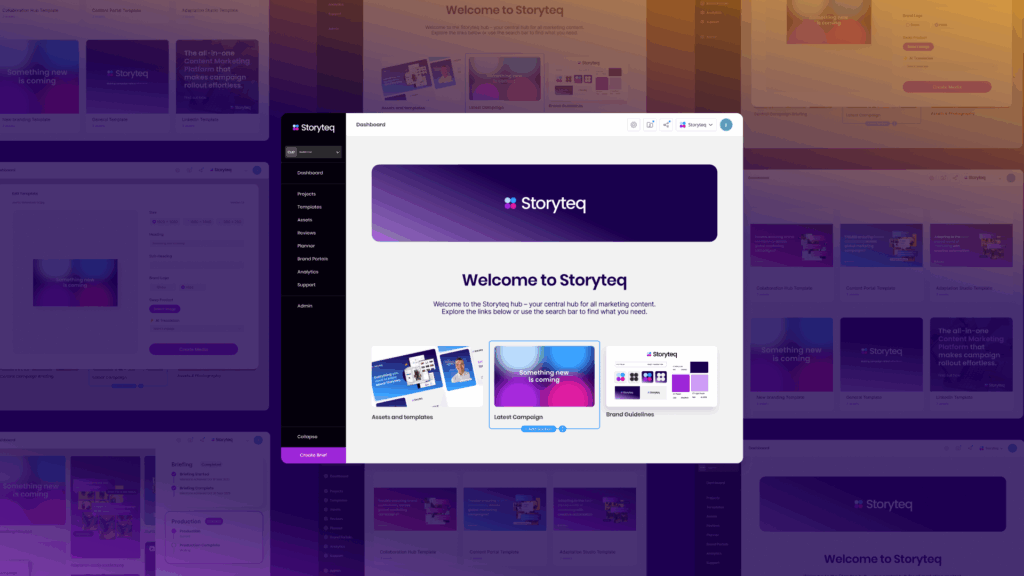

At Storyteq, we understand the complexities of tracking AI-generated content performance across the entire content lifecycle. Our platforms help you not only create content at scale but also measure its effectiveness through integrated analytics and optimization tools. By combining creative automation with performance tracking, we help you deliver content that consistently drives meaningful business results.

Frequently Asked Questions

How do I get started with implementing an AI content performance tracking system?

Begin by identifying your primary business objectives and selecting 3-5 key metrics that directly align with these goals. Next, audit your existing analytics tools to determine integration capabilities with AI content platforms. Start small by tracking one content type or channel, establish baseline measurements for 30-60 days, then gradually expand your tracking scope. Consider implementing a phased approach: first tracking basic engagement, then conversion metrics, and finally setting up more complex attribution models as your team develops expertise.

What are common mistakes companies make when measuring AI-generated content performance?

The most frequent mistakes include tracking too many metrics simultaneously (causing analysis paralysis), setting unrealistic performance expectations without proper benchmarking, ignoring content-specific context when interpreting data, and making optimization decisions too quickly before gathering sufficient data. Another critical error is measuring only immediate performance without considering long-term impact, especially for evergreen content. Finally, many organizations fail to properly communicate performance insights across teams, limiting the practical application of valuable data.

How can I troubleshoot when my AI-generated content isn't performing as expected?

First, verify your tracking implementation is correctly capturing all user interactions. Next, segment your performance data by audience characteristics, distribution channels, and content types to identify specific underperforming elements rather than making broad assumptions. Review your AI generation parameters to ensure they align with your target audience's preferences and needs. Consider conducting A/B tests with variations in tone, format, or calls-to-action to isolate problem areas. Finally, gather qualitative feedback through user surveys or interviews to uncover issues that quantitative metrics might miss.

What's the best way to interpret conflicting metrics in AI content performance?

When facing conflicting metrics, prioritize those most directly tied to your primary business objectives. Create a weighted scoring system that values high-priority metrics more heavily in your evaluation framework. Look for patterns across multiple content pieces to identify whether conflicts are outliers or systematic issues. Consider the full conversion journey: content that drives lower immediate engagement but higher ultimate conversion rates may be more valuable than high-engagement content with poor conversion. Finally, use cohort analysis to determine how metrics evolve over time, as some content delivers better long-term value despite weaker initial performance.
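A weighted scoring system of this kind can be very small. The sketch below assumes every metric has already been scaled to a 0 to 1 range; the metric names and weights are hypothetical and should reflect your own priorities.

```python
def weighted_score(metrics, weights):
    """Combine pre-normalized metrics (0 to 1) into a single priority-weighted score."""
    total_weight = sum(weights.values())
    return sum(metrics[name] * w for name, w in weights.items()) / total_weight

# Hypothetical weights: conversion matters most, reach least.
weights = {"conversion_rate": 0.6, "engagement": 0.3, "reach": 0.1}

piece_a = {"conversion_rate": 0.8, "engagement": 0.3, "reach": 0.5}
piece_b = {"conversion_rate": 0.2, "engagement": 0.9, "reach": 0.9}

score_a = weighted_score(piece_a, weights)
score_b = weighted_score(piece_b, weights)
```

With these weights, piece A outscores piece B even though B has far higher engagement and reach, because conversion carries most of the weight.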

How should my tracking approach change as we scale our AI content production?

As content volume increases, shift from manual analysis to automated reporting with exception-based alerts that flag significant performance deviations. Implement content tagging systems that allow for automated categorization and comparative analysis across similar content types. Consider deploying machine learning models that can identify performance patterns across large content sets and recommend optimization opportunities. Establish clear governance around which metrics different stakeholders monitor, with executive dashboards focusing on business outcomes while content teams access more detailed creative performance data.
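An exception-based alert can be as simple as flagging any content piece whose latest value is far from its own history. This sketch uses a z-score threshold. The data layout, the function name, and the threshold of 2.0 are assumptions, and production systems often use more robust anomaly detection.

```python
from statistics import mean, stdev

def flag_deviations(history, latest, threshold=2.0):
    """Return (content_id, z_score) for pieces that deviate from their own history."""
    alerts = []
    for content_id, values in history.items():
        # Need at least two past values and a current value to compare.
        if len(values) < 2 or content_id not in latest:
            continue
        mu, sigma = mean(values), stdev(values)
        if sigma == 0:
            continue
        z = (latest[content_id] - mu) / sigma
        if abs(z) >= threshold:
            alerts.append((content_id, round(z, 2)))
    return alerts

# Hypothetical daily page views for two content pieces.
history = {"a": [100, 110, 90, 100], "b": [50, 52, 48, 50]}
latest = {"a": 102, "b": 20}
alerts = flag_deviations(history, latest)
```

Only piece "b" is flagged here, so reviewers look at one exception instead of scanning every report.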

What future trends should we anticipate in AI content performance tracking?

Likely developments include closer integration of emotional and psychological response metrics through advanced sentiment analysis and, potentially, biometric tracking. Predictive analytics may evolve to forecast content performance before publication based on historical patterns. Privacy-focused tracking will become more important as browsers and regulators restrict third-party cookies, with first-party data and contextual analytics gaining importance. There may also be greater emphasis on measuring content's environmental impact through digital carbon footprint tracking. Finally, augmented reality and voice interaction metrics are likely to expand as AI content moves beyond traditional formats.

How can I effectively communicate AI content performance data to different stakeholders?

Customize reporting formats based on stakeholder needs: executives typically need high-level KPIs tied to business outcomes, while creative teams benefit from detailed performance breakdowns by content elements. Use visual storytelling techniques with clear data visualizations rather than raw numbers. Contextualize metrics with competitive benchmarks and historical trends to provide meaningful reference points. Schedule regular cross-functional reviews where data analysts can explain insights and answer questions. Finally, always pair performance data with specific, actionable recommendations to ensure metrics translate into practical improvements.